Determinants and parallelepipeds

Connecting Geometry and Algebra

Matrix determinants have the following strange definition that seems to have been pulled out of thin air:

$$

det(A) = \sum_{\sigma}{sign(\sigma)a_{1\sigma(1)}...a_{n\sigma(n)}}

$$

where

- $A = (a_{ij})$ is a real $n\times n$ matrix

- $\sigma$ ranges over all permutations of $\{1,...,n\}$

- $sign(\sigma)$ is $+1$ if $\sigma$ is a product of an even number of transpositions and $-1$ otherwise$^\dagger$

The determinant of A is the volume of the parallelepiped formed by its columns, multiplied by minus one if these have the opposite handedness to the unit vectors.Why are these two definitions the same? To begin to answer this we need to first define elementary matrices and then show that every square matrix can be written as a product of these.

Definition

The elementary matrices are- $E_{i, j}$ for $1 \le i,j \le n$

- $E_{i,\lambda}$ for every real $\lambda$ and $1 \le i \le n$

- $E_{i,j,\lambda}$ for every real $\lambda$ and $1 \le i,j \le n$ and $i\ne j$

- $E_{i,j}$ is $I$ with the $i^\text{th}$ and $j^\text{th}$ rows swapped

- $E_{i,\lambda}$ is $I$ with the one in the $(i,i)^\text{th}$ position replaced by $\lambda$

- $E_{i,j,\lambda}$ is $I$ with the zero in the $(i,j)^\text{th}$ position replaced by $\lambda$

Lemma

Every real square matrix is a product of elementary matrices.Proof

Suppose you have a square $n\times n$ matrix $A = (a_{ij})$. We can transform it into an upper triangular matrix$^{\dagger^2}$ using the following algorithm

1 2 3 4 5 6 7 8 9 10 11 12 13 14 15 16 17 18 19 20 21 | def make_upper_triangular(A): # Iterate through first N-1 diagonal elements of A for i in range(N-1): # Skip A[i,i] if entries below it are already zero if set(A[i+1:,i]) == { 0. }: continue # Swap row i with a row below it if A[i,i] is zero if A[i,i] == 0.: j = min({ k for k in range(i+1,N) if A[k,i] != 0. }) A[(i,j),:] = A[(j,i),:] # Scale row i to make A[i,i] equal 1. A[i,:] *= 1./A[i,i] # Subtract multiple of row i from other rows to get unit column for j in range(N): if j != i: A[j,:] -= A[j,i] * A[i,:] |

Note that the operations on lines 13, 16, and 21 are equivalent to pre-multiplying $A$ by an elementary matrices $E_{i,j}$, $E_{i,\lambda}$, and $E_{j,i,\lambda}$ respectively. Thus we end up with a sequence $E_i$ of elementary matrices and an upper triangular matrix $U$ such that

$$

E_k...E_1A = U

$$

However, there is a similar algorithm for making a matrix lower triangular whose operations are equivalent to post-multiplying by elementary matrices$^{\dagger^3}$. If you perform this algorithm on an upper triangular matrix $U$ you get a sequence of elementary matrices and a diagonal matrix$^{\dagger^4}$:

$$

UE'_{k'}...E'_{1} = D

$$

But a Diagonal matrix is just a product of elementary matrices of the form $E_{i,\lambda}$, so

$$

D = E''_{k''}...E''_{1}

$$

Putting these all together and observing that the inverses of elementary matrices are elementary matrices gives the result.

Lemma

For any $n\times n$ matrices $A$ and $B$ we have $det(AB) = det(A)det(B)$Proof

First we need to show that the statement is true if $A$ is an elementary matrix. This is simple since- $E_{i,j}B$ is just $B$ with the $i^\text{th}$ and $j^\text{th}$ rows swapped, so it's determinant can be obtained by replacing indices $b_{k,\sigma(k)}$ in the definition of $det(B)$ by $b_{k,\sigma\tau(k)}$ where $\tau$ is the transpose of $i$ and $j$. But $sign(\sigma\tau) = - sign(\sigma)$. So

\begin{align}

det(E_{i,j}B) &= -det(B) \\

&= det(E_{i,j})det(B)

\end{align}

$$

- $E_{i,\lambda}B$ is just $B$ with the $i^\text{th}$ row scaled by $\lambda$. Therefore every term in its determinant is just the corresponding one in the determinant of $B$ multiplied by $\lambda$. So

\begin{align}

det(E_{i,\lambda}B) &= \lambda det(B) \\

&= det(E_{i,\lambda})det(B)

\end{align}

$$

- $E_{1,j,\lambda}B$ is just $B$ with a multiple of the $j^\text{th}$ row added to the $1^\text{st}$ row. So its determinant is $\sum_{\sigma}{sign(\sigma)(b_{1\sigma(1)}+\lambda b_{j\sigma(1)})b_{2\sigma(2)}...b_{n\sigma(n)}}$ which expands to the sum of $det(B)$ and $\lambda det(B')$ where $B'$ is just $B$ with the first row replaced by a copy of the $j^\text{th}$ row. But the determinant of a matrix with two identical rows is zero. So

\begin{align}

det(E_{1,j,\lambda}B) &= det(B) \\

&= det(E_{1,j,\lambda})det(B)

\end{align}

$$

$$

\begin{align}

det(AB) &= det(E_1...E_kE_1'...E_{k'}')\\

&=det(E_1)...det(E_k)det(E_1')...det(E_{k'}')\\

&=det(A)det(B)

\end{align}

$$

Theorem

The determinant of a matrix $A$ is the quantity $V(A)$ whose absolute value is the volume of the parallelepiped formed by its columns, and whose sign is +1 iff it has the same handedness as the parallelepiped formed by the columns of the identity matrixProof

First we need to show that if $E$ is an elementary matrix and then for any $B$$$

V(EB) = det(E)V(B)

$$

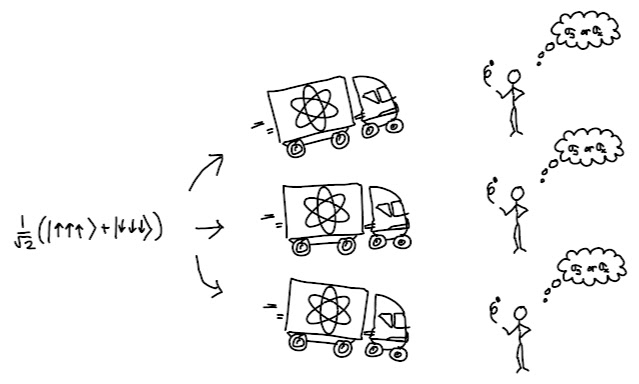

The transforms corresponding to each type of elementary matrix are illustrated above and is straightforward to check:

- $E_{i,j}$ just changes the handedness, and $det(E_{i,j}) = -1$

- $E_{i,\lambda}$ stretches by $\lambda$ in the $i^{th}$ direction, and $det(E_{i,\lambda}) = \lambda$

- $E_{i,j,\lambda}$ skews but does not change base lengths, heights or volumes, and $det(E_{i,j,\lambda}) = 1$

$$

A = E_k...E_1I

$$

Then

$$

\begin{align}

V(A) &= det(E_k)V(E_{k-1}...E_2I) \\

&=det(E_k)...det(E_1)V(I)\\

&=det(E_k)...det(E_1)\\

&=det(A)\\

\end{align}

$$

Consequences

We know already that the statement that $A$ is non-invertible is equivalent to $det(A) = 0$, but this theorem provides us with an intuitive understanding why. Non-invertability is equivalent to the claim that there's a column vector $\mathbf{y}$ such that $A\mathbf{x} = \mathbf{y}$ has no solution. This makes sense if the parallelepiped has zero volume as it means all the columns are in the same hyperplane!It also explains the following formula in differentiable geometry, where $z^i$ are arbitrary curvilinear coordinates, where $\mathbf{z_i} = \frac{\partial \mathbf{R}}{\partial z^i}$ are the covariant basis vectors, and where $z_{ij} = \mathbf{z_i}\cdot \mathbf{z_j}$ is the so called metric tensor

$$

Vol = \int_{z^1}...\int_{z^n} \sqrt{det(Z)} dz^1...dz^n

$$

The $\sqrt{det(Z)}$ is the volume of the parallelepiped formed by the covariant basis $\mathbf{z_i}$ because if we define $A = \left(\mathbf{z_1}|...|\mathbf{z_n}\right)$ then

$$

\begin{align}

V(A) &= det(A) \\

&=\sqrt{det(A)det(A)} \\

&=\sqrt{det(A^T)det(A)} \\

&=\sqrt{det(A^TA)}\\

&=\sqrt{det(Z)}

\end{align}

$$

And finally, a test...

The following matrix has zero determinant. In fact so does any $n\times n$ matrix built from any consecutive integers, provided $n \gt 2$. Why?$$

\begin{bmatrix}1 & 2 & 3 & 4 \\

5 & 6 & 7 & 8 \\

9 & 10 & 11 & 12 \\

13 & 14 & 15 & 16 \\

\end{bmatrix}

$$

Footnotes

- ($\dagger$) It's a theorem in group theory that every permutation is always a product of an even number or an odd number of transpositions, but never both.

- ($\dagger^2$) A matrix whose elements $a_{ij}$ are zero if $i \gt j$

- ($\dagger^3$) Just swap row and column indices in the algorithm shown

- ($\dagger^4$) You can easily prove this by induction on $i$ where the inductive statement is: after the $i^\text{th}$ step $A$ is still upper triangular and all non-diagonal entries in the first $i$ rows are zero.

Comments

Post a Comment